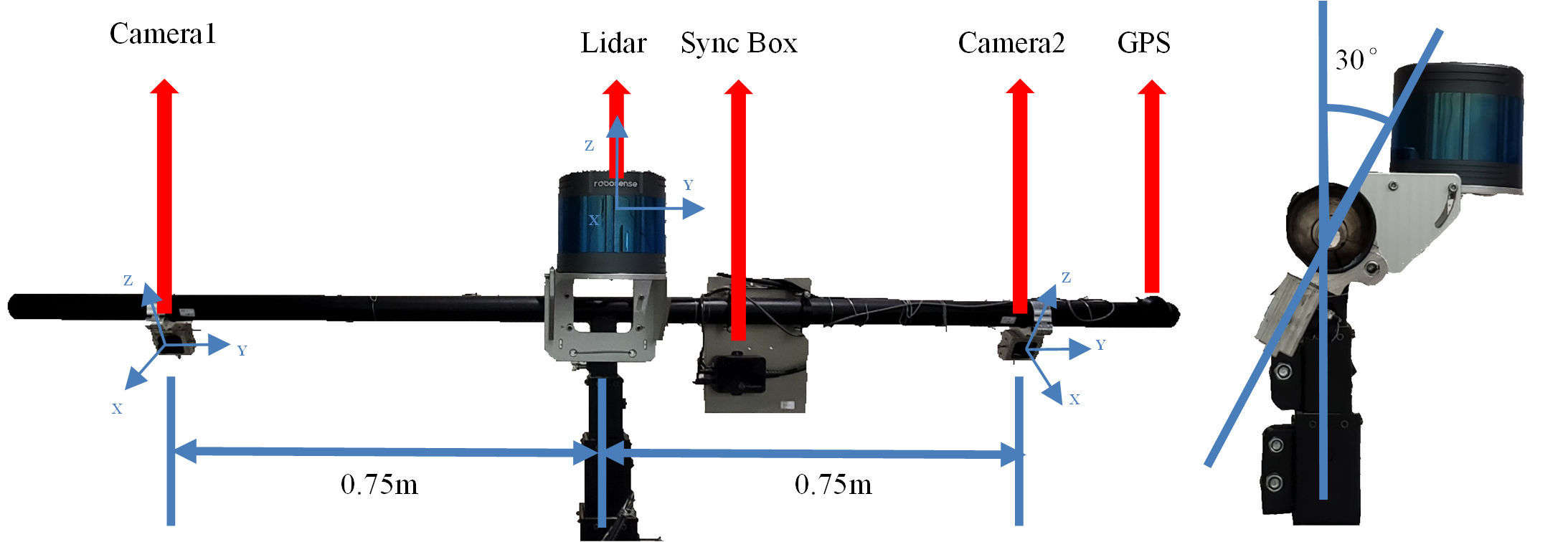

The detailed sensor parameters are listed below.

| Sensor | Information |
| --- | --- |
| Robosensor Ruby-Lite Lidar | 80 beams, 10 Hz detection frequency, range: 230 m, single echo mode, hFOV: 0°-360°, vFOV: -25°-15°, angle resolution: 0.2° |
| Sensing-SG5 color camera | 30 Hz, 5.44 MP, CMOS: Sony IMX490RGGB, rolling shutter, HDR dynamic range: 120 dB, resolution: 1920×1080 |
| BS-280 GPS | Time error: less than 1 µs |
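
As a rough sanity check on the Lidar figures in the table, the sketch below estimates the maximum number of points in one sweep. It assumes that the 0.2° angle resolution is the horizontal (azimuth) step and that every beam returns exactly one point per step in single echo mode; neither assumption is stated in the table, and the function name is only illustrative.

```python
# Values copied from the sensor table.
BEAMS = 80
H_FOV_DEG = 360.0
ANGLE_RES_DEG = 0.2   # ASSUMPTION: treated as the horizontal (azimuth) step
FRAME_RATE_HZ = 10


def max_points_per_frame(beams: int, h_fov_deg: float, res_deg: float) -> int:
    """Upper bound on returns per sweep, one return per beam per azimuth step."""
    azimuth_steps = round(h_fov_deg / res_deg)
    return beams * azimuth_steps


points_per_frame = max_points_per_frame(BEAMS, H_FOV_DEG, ANGLE_RES_DEG)
points_per_second = points_per_frame * FRAME_RATE_HZ
print(points_per_frame, points_per_second)  # 144000 1440000
```

Real frames will usually contain fewer points, since beams that hit nothing produce no return.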

    └─IPS300+_detection
        ├─calib
        │      calib_file.txt
        │      README.txt
        ├─data
        │  ├─PCD_COM_ROI               # Point clouds from the two IPUs after the preprocessing described in our article. (Labeling was done on these point clouds.)
        │  ├─IPU1
        │  │  ├─IPU1_cam1              # Raw (distorted) images from each camera.
        │  │  ├─IPU1_cam2
        │  │  ├─IPU1_pcd               # Raw point clouds (None removed) from each Lidar.
        │  │  └─IPU1_cam1_undistort    # Undistorted images in jpg format.
        │  └─IPU2
        │      ├─IPU2_cam1
        │      ├─IPU2_cam2
        │      ├─IPU2_cam1_undistort
        │      └─IPU2_pcd
        ├─label
        │  ├─json                      # Labels in json format.
        │  ├─txt                       # Labels in txt format.
        │  └─README.txt
        └─README.txt
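
To make the detection layout concrete, here is a minimal sketch that pairs the two camera folders and the point cloud folder of one IPU frame by frame. It assumes that files belonging to the same frame share a file-name stem across `IPU1_cam1`, `IPU1_cam2` and `IPU1_pcd`; the listing does not confirm this naming, so check the bundled README.txt files first. The helper names `index_by_stem` and `collect_frames` are hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def index_by_stem(folder: Path) -> Dict[str, Path]:
    """Map each file's stem (name without extension) to its path."""
    if not folder.is_dir():
        return {}
    return {p.stem: p for p in sorted(folder.iterdir()) if p.is_file()}


def collect_frames(root: Path, unit: str = "IPU1") -> List[Dict[str, Path]]:
    """Return one dict per frame that is present in every modality of `unit`.

    ASSUMPTION: a frame is identified by a stem shared across folders.
    """
    data = root / "data" / unit
    sources = {
        "cam1": index_by_stem(data / f"{unit}_cam1"),
        "cam2": index_by_stem(data / f"{unit}_cam2"),
        "pcd": index_by_stem(data / f"{unit}_pcd"),
    }
    # Keep only the stems that appear in all three folders.
    common = set.intersection(*(set(idx) for idx in sources.values()))
    return [
        {name: idx[stem] for name, idx in sources.items()}
        for stem in sorted(common)
    ]
```

Calling `collect_frames(Path("IPS300+_detection"))` would then yield dictionaries with `cam1`, `cam2` and `pcd` paths for each complete frame; incomplete frames are skipped rather than reported.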

    └─IPS300+_raw
        │  README.txt
        ├─car_raw
        │      turned_left.7z
        │      went_straight.7z
        ├─RSU1_raw
        │      2020-12-16-17-27-48.7z.001
        │      2020-12-16-17-27-48.7z.002
        │      2020-12-16-17-27-48.7z.003
        │      2020-12-16-17-27-48.7z.004
        │      2020-12-16-17-34-57.7z.001
        │      2020-12-16-17-34-57.7z.002
        │      2020-12-16-17-34-57.7z.003
        │      2020-12-16-17-41-38.7z
        │      2020-12-16-17-44-50.7z.001
        │      2020-12-16-17-44-50.7z.002
        │      2020-12-16-17-44-50.7z.003
        │      2020-12-16-17-44-50.7z.004
        │      2020-12-16-17-50-21.7z
        └─RSU2_raw
               2020-12-16-17-27-45.7z
               2020-12-16-17-34-55.7z
               2020-12-16-17-41-29.7z
               2020-12-16-17-44-49.7z
               2020-12-16-17-50-23.7z
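
Several of the raw recordings are split into numbered volumes (`.7z.001`, `.7z.002`, ...). The sketch below groups such file names by archive and builds a `7z x` command for each one. It assumes the 7-Zip command-line tool is installed as `7z` and that all volumes of an archive sit in the same folder; the password value is a placeholder, and the helper names are hypothetical.

```python
import re
from collections import defaultdict

# Matches "name.7z" and "name.7z.NNN" (split volume).
VOLUME_RE = re.compile(r"^(?P<base>.+\.7z)(?:\.(?P<part>\d{3}))?$")


def group_archives(names):
    """Group file names by archive and return {archive: sorted volume list}."""
    groups = defaultdict(list)
    for name in names:
        match = VOLUME_RE.match(name)
        if match:
            groups[match.group("base")].append(name)
    return {base: sorted(parts) for base, parts in groups.items()}


def extract_command(volumes, password):
    """Build a 7z extraction command.

    For a split archive, 7z is pointed at the first volume and picks up
    the remaining volumes from the same folder.
    """
    return ["7z", "x", f"-p{password}", volumes[0]]


names = [
    "2020-12-16-17-27-48.7z.002",
    "2020-12-16-17-27-48.7z.001",
    "2020-12-16-17-41-38.7z",
]
for base, volumes in group_archives(names).items():
    # "PASSWORD" is a placeholder, not the real download password.
    print(" ".join(extract_command(volumes, "PASSWORD")))
```

The resulting argument lists can be passed to `subprocess.run` once the files and the password are available.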

    └─IPS300+_tracking
        ├─01
        │  ├─README.txt
        │  ├─label
        │  │  ├─json
        │  │  │      000000_LABEL.json    # Labels in json format.
        │  │  ├─txt
        │  │  │      000000_LABEL.txt     # Labels in txt format.
        │  │  └─README.txt
        │  └─data
        │      ├─PCD_COM_ROI
        │      ├─IPU1
        │      │  ├─IPU1_cam1             # Raw (distorted) images from each camera.
        │      │  ├─IPU1_cam2
        │      │  ├─IPU1_pcd              # Raw point clouds (None removed) from each Lidar.
        │      │  └─IPU1_cam1_undistort   # Undistorted images in jpg format.
        │      └─IPU2
        │          ├─IPU2_cam1
        │          ├─IPU2_cam2
        │          ├─IPU2_cam1_undistort
        │          └─IPU2_pcd
        ├─...............
        └─README.txt

The IPS300+ data is published under the CC BY-NC-SA 4.0 license. You may need to send an email to whn19@mails.tsinghua.edu.cn to obtain the download password.

Thanks to Datatang (Stock Code: 831428) for providing us with professional data annotation services. Datatang is the world’s leading AI data service provider. We have accumulated numerous training datasets and provide on-demand data collection and annotation services. Our Data++ platform can greatly reduce data processing costs by integrating automatic annotation tools. Datatang provides high-quality training data to 1,000+ companies worldwide and helps them improve the performance of their AI models.